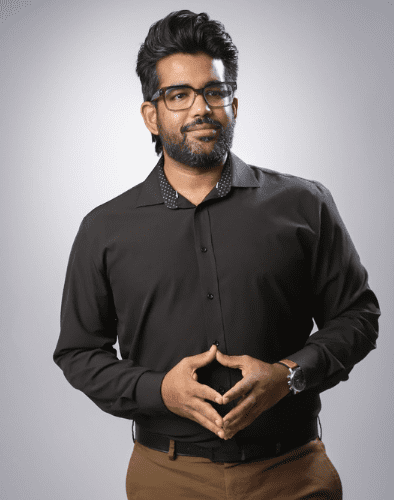

In regulated healthcare, the hardest part of scaling AI is often not the model; it is the data boundary. The data privacy management software market is projected to grow from $5.37 billion in 2025 to $45.13 billion in 2032, a signal that governance is moving from policy decks into production systems. Karthik Reddy Kachana, IT Director and Enterprise Architect at Abbott Labs, has spent the last few years living inside that reality, where a single complaint record can carry health data, national residency obligations, and real operational urgency. As an independent editorial board member for Frontiers in Emerging Technology at APEC Publisher, he approaches this space with a practical bias: build constraints that teams can actually run. This article shares his perspective on what data residency now means for global AI programs, and what it will take to scale safely from here.

When Geography Stops Being a Hosting Detail

“People think of residency as a checkbox until the day it blocks a patient support workflow,” Kachana says. “Once you are dealing with regulated data, geography becomes part of the product. If you cannot prove where data lives and who can touch it, you do not have a scalable system.” That shift is accelerating as enforcement hardens, with penalties under China’s data protection regime that can reach 5% of annual turnover. Location now decides legality.

For Abbott, the pressure was not theoretical. Abbott’s primary global customer service platform, a Salesforce Service Cloud platform used to manage medical complaints for the organization’s flagship diabetes care portfolio, handled sensitive records that included personal health data from customers in China and Russia, even while the system itself was hosted on a Paris instance. Kachana led the strategy and delivery to localize operations without shutting down complaint intake, reaching go-live in China in March 2023 and in Russia in March 2024 as enforcement expectations tightened.

Keeping One Global System While Localizing What Matters

Once teams accept that residency is real, the next question is how to avoid turning every country into its own separate stack. That is where most global programs stumble, because fragmentation quietly kills reuse. Privacy law coverage is already broad, with 144 countries now having data protection and privacy laws, which means today’s “one-off” design becomes tomorrow’s repeated cost.

Kachana’s approach was to keep Abbott’s primary global customer service platform operational globally while isolating what truly needed to stay in the country. He recalls a moment that made the tradeoff painfully clear: a late-week review where the team realized they could meet the letter of the law by splitting systems, but they would lose unified quality tracking overnight. “That was the point where I pushed hard for field-level segregation,” he says. “We needed a clean boundary for PII, but we could not afford to fracture the operating model.” He vetted third-party options and selected InCountry, then designed a pattern where sensitive fields could be stored locally while global agents could still work the non-sensitive parts of the workflow. By avoiding separate Salesforce orgs and duplicated complaint infrastructure, the design also prevented an estimated $20–30 million in IT duplication costs.
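The field-level pattern described above can be illustrated with a minimal sketch. It is not Abbott's implementation and does not use InCountry's or Salesforce's actual APIs; the field names, the `RegionalVault` class, and the `pii_token` key are all hypothetical, chosen only to show how a record might be split so that sensitive values stay in an in-country store while the global record keeps a reference.

```python
import uuid
from dataclasses import dataclass, field

# Hypothetical classification; the article does not list which fields were treated as sensitive.
SENSITIVE_FIELDS = {"patient_name", "phone", "health_notes"}


@dataclass
class RegionalVault:
    """Stand-in for an in-country data store. Not any vendor's real API."""
    country: str
    _rows: dict = field(default_factory=dict)

    def put(self, values: dict) -> str:
        # Store the sensitive values locally and hand back an opaque token.
        token = f"{self.country}:{uuid.uuid4().hex}"
        self._rows[token] = dict(values)
        return token

    def get(self, token: str) -> dict:
        return dict(self._rows[token])


def split_record(record: dict, vault: RegionalVault) -> dict:
    """Return the global view: non-sensitive fields plus a token for the rest."""
    sensitive = {k: v for k, v in record.items() if k in SENSITIVE_FIELDS}
    global_view = {k: v for k, v in record.items() if k not in SENSITIVE_FIELDS}
    global_view["pii_token"] = vault.put(sensitive)
    return global_view


def rehydrate(global_view: dict, vault: RegionalVault, viewer_country: str) -> dict:
    """Merge sensitive fields back in only for viewers in the vault's country."""
    if viewer_country != vault.country:
        return dict(global_view)  # global agents see only the non-sensitive view
    merged = {k: v for k, v in global_view.items() if k != "pii_token"}
    merged.update(vault.get(global_view["pii_token"]))
    return merged


if __name__ == "__main__":
    vault = RegionalVault(country="CN")
    complaint = {
        "case_id": "C-1001",
        "product": "sensor",
        "status": "open",
        "patient_name": "Example Patient",
        "phone": "000-0000",
        "health_notes": "example note",
    }
    global_view = split_record(complaint, vault)
    print(sorted(global_view))  # field names visible to global agents
    print(rehydrate(global_view, vault, "CN") == complaint)
```

In this sketch a single complaint record stays in one global system, which is what keeps quality tracking unified; only the lookup of sensitive values depends on where the viewer sits. A production design would also need access control, audit logging, and encryption, none of which are shown here.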

Risk Looks Different When the Data Is Health Related

As soon as sensitive healthcare records are involved, “good enough” controls become a liability. Even before you talk about fines, the operational exposure is stark. The global average cost of a data breach is $4.88 million, and that number is a reminder that failures show up as real budget events, not abstract risk registers. This is where global programs get hurt.

For Kachana, the goal was continuity with proof. The localization framework allowed Abbott to continue regulated operations in China and Russia instead of freezing complaint intake or degrading support for diabetic patients. What was at stake was uninterrupted market access in high-growth territories that contribute significantly to the portfolio’s multi-billion dollar global revenue. Midway through the program, he also took on an external evaluator’s lens as a Devpost hackathon judge, a role that reinforced his bias toward designs that can be explained, tested, and defended under scrutiny.

The Human Constraint Behind Compliance

It is easy to narrate residency as legal theory until you are staring at the downstream effects. When health support systems fail, people do not just lose convenience, they lose time, confidence, and sometimes access. Nobody wants that call to end with “we cannot see your record.” It is a small sentence with big consequences.

Kachana’s localization design protected continuity for regional complaint intake teams while still keeping global quality tracking intact. The work also accounted for the patient side of the equation: without support for the organization’s flagship diabetes care portfolio in the market, patients could have faced more than $100 per month in additional costs for alternative CGMs or a return to older glucose testing methods. “The ethical part is not separate from the technical part,” he says. “If you stop serving patients because your data model cannot meet residency rules, you have built the wrong system.”

What Comes Next for Sovereign Ready AI Programs

Residency is also becoming the platform decision that shapes AI feasibility, because models inherit the constraints of their inputs. Governments and regulators are effectively pulling infrastructure toward sovereign patterns, and the government cloud market alone is expected to reach $41.56 billion in 2025, on the way to materially larger footprints by 2030. That direction of travel matters: programs that treat residency as an afterthought will keep discovering limits late, when rewrites are the most expensive.

At Abbott, Kachana’s next step was to translate these constraints into a reusable operating model for AI experimentation. Through the AI Center of Excellence for Architecture and a Salesforce AI innovation hub, his team standardized intake and sandboxing so pilots moved faster without improvising governance each time. The results quantified the shift: time to build AI pilots dropped by 70%, and sales reps reduced pre-call planning time by 30–40% using Copilot features in the prototypes. He also continues to evaluate practical business value as a Business Intelligence judge, which keeps his bar simple: if a control cannot be followed under pressure, it is not a control.

“The future is sovereign by default,” Kachana says. “If you want AI at enterprise scale, you design for residency, auditability, and reuse first. Then the models can actually ship.”