Retail data platforms sit at the center of how modern retail runs. Shoppers now expect immediate availability and fast fulfillment across stores and digital channels, while promotions create sharp demand spikes and supply conditions change mid-cycle. Privacy shifts and AI-driven personalization push more decisioning onto first-party data, raising event volume and the penalty for stale or conflicting records. As a result, these platforms carry decisions that move money every hour: what to stock, where to ship and how much capital to tie up at each location. Resilience means a short signal-to-decision time, a consistent view of product, location and inventory in motion, and recovery plans that keep the business operating when components fail.

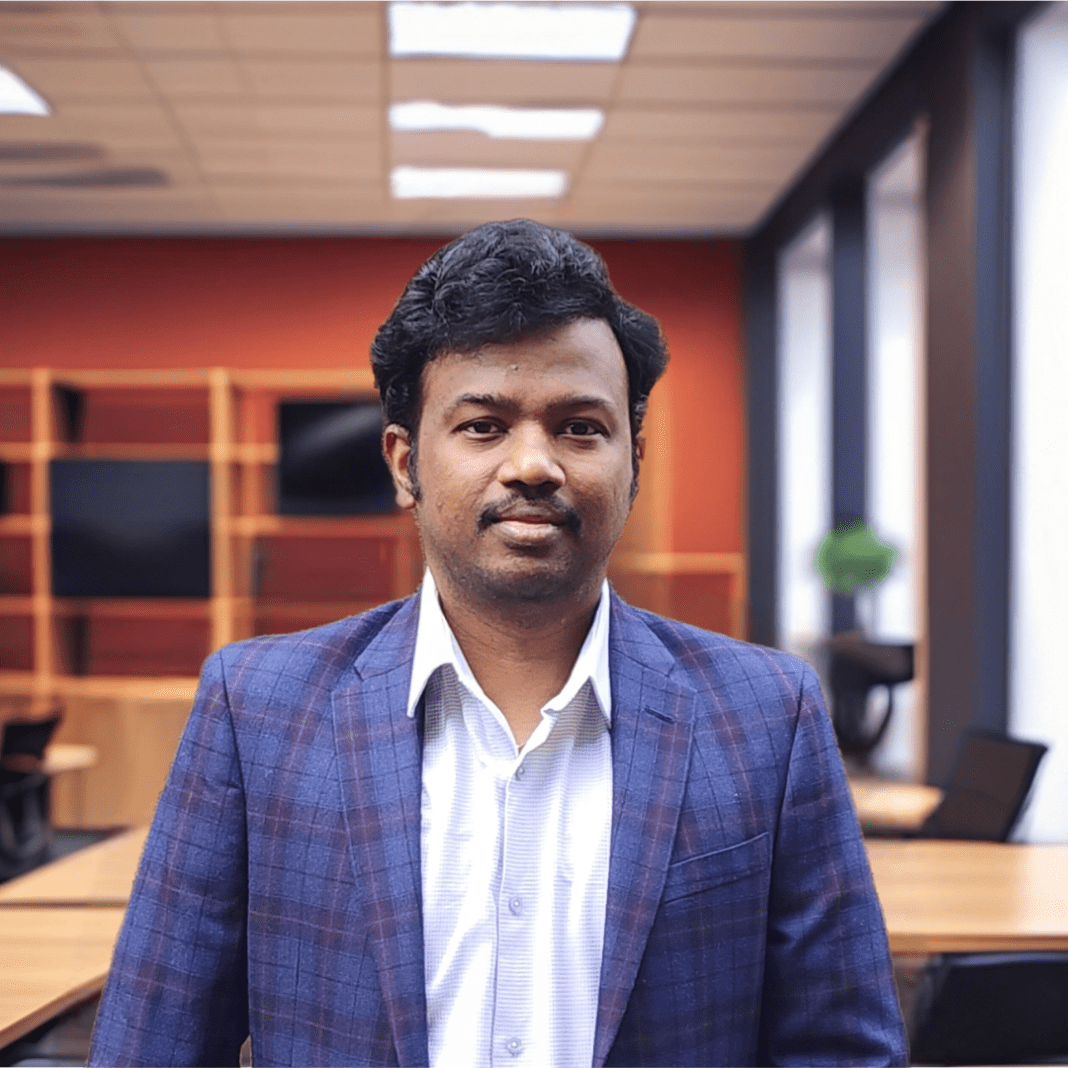

Balakrishna Aitha, a Lead Data Engineer at a major national U.S. retailer and a Senior IEEE panel reviewer, builds for that reality. His operating principle is simple: design pipelines that stay available under stress, keep latency within budget and show business teams exactly what changed and why.

From Demand Signals to Store-Level Action

Trustworthy signals start with the underlying records, so a first priority for any large retailer is turning product and location data into a single, analytics-ready view. Inventory distortion remains one of the industry’s most expensive problems, with estimated global losses of $1.7 trillion in 2024: $1.2 trillion from out-of-stocks and $554 billion from overstocks. Meanwhile, U.S. e-commerce sales in the fourth quarter of 2024 reached $352.9 billion, or 17.9 percent of total retail sales, and that omnichannel mix amplifies every delay or mismatch between demand and supply.

At a leading national retailer operating hundreds of physical stores alongside a fast-growing online business, Aitha led the design of a cloud-native forecasting and replenishment platform that integrated store, supplier and transactional data into a single real-time decision layer. Using Google Cloud Dataflow for large-scale batch and stream processing, Pub/Sub for continuous ingest and BigQuery for predictive analytics, his team enabled planners to view 52-week forward demand at SKU and location level while tracking 52 weeks of history. Automated reconciliation through Apache Airflow DAGs and Tableau dashboards replaced manual spreadsheets and shortened forecast cycles from days to hours. The platform reduced out-of-stocks, improved inventory turnover and cut carrying costs across more than 600 locations.
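The source does not describe how the Airflow tasks compare records. As an illustration only, the reconciliation step can be sketched in plain Python. The sketch assumes two hypothetical sources, for example point-of-sale unit movements and inventory-system counts, both keyed by `(sku, location_id)`. The names `reconcile`, `ReconResult` and the `tolerance` parameter are illustrative and are not taken from the platform.

```python
from dataclasses import dataclass

# Hypothetical composite key: (sku, location_id). Values are unit counts.
Key = tuple[str, str]


@dataclass
class ReconResult:
    matched: list[Key]
    mismatched: dict[Key, tuple[int, int]]  # key -> (left count, right count)
    only_in_left: list[Key]
    only_in_right: list[Key]


def reconcile(left: dict[Key, int], right: dict[Key, int], tolerance: int = 0) -> ReconResult:
    """Classify every SKU-location key as matched, mismatched or missing on one side."""
    matched: list[Key] = []
    mismatched: dict[Key, tuple[int, int]] = {}
    for key in sorted(left.keys() & right.keys()):
        # Counts within the tolerance are treated as agreeing.
        if abs(left[key] - right[key]) <= tolerance:
            matched.append(key)
        else:
            mismatched[key] = (left[key], right[key])
    return ReconResult(
        matched=matched,
        mismatched=mismatched,
        only_in_left=sorted(left.keys() - right.keys()),
        only_in_right=sorted(right.keys() - left.keys()),
    )
```

In a scheduled pipeline, a check like this would run per batch. The mismatched and one-sided keys would then feed a dashboard or an exception queue, so nobody has to diff spreadsheets by hand.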

“Forecasting only helps if the data is current and trustworthy. The goal was to make every replenishment decision fast, factual and verifiable,” says Aitha.

Low-Latency Streaming That Holds Under Pressure

Beyond data quality, time-to-insight matters. Large retailers now depend on two managed layers that must hold steady under burst traffic: an event streaming service for continuous ingest and a cloud analytical store for near-real-time queries. Managed event streaming services commonly target 99.95% monthly uptime for event ingress, while cloud data warehouses target 99.99% monthly uptime on their non-Standard tiers. Those service levels define the reliability envelope within which teams set end-to-end latency budgets and freshness expectations.
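It helps to turn those percentages into minutes. The sketch below assumes a 30-day month, so a 31-day month allows slightly more downtime. Under that assumption, 99.95% permits about 21.6 minutes of downtime per month and 99.99% permits about 4.3 minutes. If a pipeline needs both layers up at the same time, the combined availability is roughly their product, about 99.94%, or close to 26 minutes of allowed downtime. That product also assumes failures are independent, which is an optimistic simplification. The function names are illustrative.

```python
def allowed_downtime_minutes(uptime_pct: float, days: int = 30) -> float:
    """Minutes of downtime a monthly uptime objective permits (assumes `days`-day month)."""
    total_minutes = days * 24 * 60
    return total_minutes * (1 - uptime_pct / 100)


def serial_availability(*uptime_pcts: float) -> float:
    """Combined availability (%) when every component must be up at once.

    Assumes independent failures, which is an optimistic simplification.
    """
    combined = 1.0
    for pct in uptime_pcts:
        combined *= pct / 100
    return combined * 100


if __name__ == "__main__":
    for pct in (99.95, 99.99):
        print(f"{pct}% -> {allowed_downtime_minutes(pct):.2f} min per 30-day month")
    both = serial_availability(99.95, 99.99)
    print(f"ingest + warehouse in series: {both:.4f}%")
```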

Availability targets alone do not guarantee fresh insights. Promotions and seasonal peaks stress backpressure limits, retry behavior and windowing choices, any of which can turn current events into delayed decisions. The operating goal is to keep end-to-end latency, from event capture to query, inside a predictable budget so that store- and SKU-level decisions reflect the latest data even when concurrency spikes. Beyond his production work, Aitha also served as a judge for the Business Intelligence Group’s 2025 Stratus Awards.
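A capture-to-query latency budget can be checked by summing the delay each hop adds and comparing the total with the budget. The following is a minimal sketch under stated assumptions. The stage names and numbers are hypothetical. Summing per-stage p95 values is only a conservative approximation, because percentiles do not add exactly. A windowed aggregation stage can add up to its full window length, which is why the choice of window feeds directly into freshness.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Stage:
    name: str
    p95_seconds: float  # hypothetical observed or estimated p95 latency for this hop


def check_budget(stages: list[Stage], budget_seconds: float) -> tuple[float, float, str]:
    """Return (total latency, remaining slack, name of the slowest stage).

    Treats the sum of per-stage p95 values as a conservative estimate of
    end-to-end latency; negative slack means the budget is blown.
    """
    total = sum(s.p95_seconds for s in stages)
    slack = budget_seconds - total
    worst = max(stages, key=lambda s: s.p95_seconds).name
    return total, slack, worst


if __name__ == "__main__":
    pipeline = [
        Stage("ingest", 2.0),
        Stage("transform_60s_window", 60.0),  # a 60 s window can hold an event up to 60 s
        Stage("warehouse_load", 10.0),
    ]
    total, slack, worst = check_budget(pipeline, budget_seconds=120.0)
    print(f"total={total}s slack={slack}s slowest={worst}")
```

Reporting the slowest stage alongside the slack points operators at the first lever to pull. For example, shortening a window is often cheaper than adding ingest capacity.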

At a major U.S. retailer with a nationwide store footprint and a large e-commerce channel, Aitha runs failure-aware orchestration rather than a single linear path. Cloud Composer schedules Apache Airflow DAGs that manage inter-pipeline dependencies, automatic retries and controlled backfills so that delayed events do not cascade into stale downstream tables. A dedicated historical-correction pipeline repairs bad records without manual intervention, while autoscaling Dataflow jobs keep transformation throughput within the latency budget during bursts. Pub/Sub decouples producers from consumers and provides continuous ingest, so downstream consumers still see current datasets in BigQuery even when individual upstream systems lag.
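Airflow provides its own task-level retry settings, such as `retries` and `retry_exponential_backoff`, and its own backfill tooling. The plain-Python sketch below does not reproduce how Airflow implements them, and Airflow's backoff differs in detail. It only illustrates the two ideas in the paragraph. The first is a capped exponential retry schedule. The second is a "controlled" backfill that finds missing daily partitions and replays them in small, ordered batches instead of all at once. Function names, defaults and the daily-partition layout are hypothetical.

```python
from datetime import date, timedelta


def retry_delays(retries: int, base_seconds: float = 30.0, cap_seconds: float = 600.0) -> list[float]:
    """Capped exponential backoff: base * 2**attempt, never above cap_seconds."""
    return [min(base_seconds * 2 ** attempt, cap_seconds) for attempt in range(retries)]


def plan_backfill(start: date, end: date, completed: set[date], batch_size: int = 3) -> list[list[date]]:
    """Find missing daily partitions in [start, end] and group them into ordered batches.

    Small batches keep a backfill from competing with live loads for capacity.
    """
    missing: list[date] = []
    day = start
    while day <= end:
        if day not in completed:
            missing.append(day)
        day += timedelta(days=1)
    return [missing[i:i + batch_size] for i in range(0, len(missing), batch_size)]


if __name__ == "__main__":
    print(retry_delays(6))
    done = {date(2024, 11, 2), date(2024, 11, 5)}
    for batch in plan_backfill(date(2024, 11, 1), date(2024, 11, 7), done, batch_size=2):
        print([d.isoformat() for d in batch])
```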

“You cannot wish away bursts. You budget latency, you design for backpressure and you give operators simple, tested levers when something fails,” notes Aitha.
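Backpressure can be shown with a bounded buffer between a producer and a consumer. When the buffer is full, the producer blocks, so overload slows intake instead of growing memory and hiding latency in an unbounded queue. The sketch below assumes a single consumer thread and uses a placeholder transform. Managed services such as Pub/Sub client libraries expose their own flow-control settings, and this is not how they implement them.

```python
import queue
import threading


def run_pipeline(events: list[int], capacity: int = 4) -> list[int]:
    """Producer/consumer over a bounded buffer.

    put() blocks while the buffer holds `capacity` items, so a fast producer
    is held to the consumer's pace: that blocking is the backpressure.
    """
    buffer: queue.Queue = queue.Queue(maxsize=capacity)
    processed: list[int] = []
    sentinel = object()  # signals the consumer to stop

    def consumer() -> None:
        while True:
            item = buffer.get()
            if item is sentinel:
                break
            processed.append(item * 2)  # placeholder transform

    worker = threading.Thread(target=consumer)
    worker.start()
    for event in events:
        buffer.put(event)  # blocks when full instead of letting the queue grow
    buffer.put(sentinel)
    worker.join()
    return processed


if __name__ == "__main__":
    print(run_pipeline(list(range(10))))
```

An operator lever could be added by passing a timeout to `put()`. The producer would then catch `queue.Full` and shed or divert load once the wait exceeds the latency budget.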

Disaster Recovery for Real-Time Orders

Mission-critical transactions have a narrower failure tolerance than analytics, and order ecosystems are the clearest case. The cost profile is stark: in recent global surveys, 54 percent of significant outages cost more than $100,000 and 20 percent cost more than $1 million. That cost profile justifies a recovery posture that treats cross-domain order flows as a protected service rather than a best-effort pipeline.

Aitha led disaster recovery (DR) enablement for an enterprise transaction platform that processes more than 8 million events daily, and 300,000 per 30-minute window at peak, spanning order capture, order management and fulfillment across more than 20 applications. He executed a migration to a cloud-based DR environment with real-time replication and orchestrated failover, validating throughput under peak loads while preserving message order and data fidelity.
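The volumes translate into rates a DR environment must sustain. 8,000,000 events over 86,400 seconds averages about 93 events per second. A peak of 300,000 events in 1,800 seconds is about 167 events per second, or 1.8 times the daily average. The sketch below computes those rates and illustrates one way to check a replica after failover. It assumes each record is a hypothetical `(order_id, sequence, payload)` tuple and that "message order" means strictly increasing sequence numbers per order. It then checks count, per-order ordering and content separately. None of these names or record shapes come from the source.

```python
import hashlib
from collections import defaultdict

Record = tuple[str, int, str]  # hypothetical (order_id, sequence, payload)


def rates(daily_events: int, peak_window_events: int, window_seconds: int = 1800) -> tuple[float, float]:
    """Average events/s over a day and events/s within the peak window."""
    return daily_events / 86_400, peak_window_events / window_seconds


def validate_replica(primary: list[Record], replica: list[Record]) -> dict[str, bool]:
    """Compare a replica stream with the primary on count, ordering and content."""

    def digest(records: list[Record]) -> str:
        # Order-insensitive content fingerprint: sort first, then hash.
        h = hashlib.sha256()
        for order_id, seq, payload in sorted(records):
            h.update(f"{order_id}|{seq}|{payload}".encode())
        return h.hexdigest()

    def in_order(records: list[Record]) -> bool:
        # Sequence numbers must strictly increase per order (catches reorders and duplicates).
        last: defaultdict[str, int] = defaultdict(lambda: -1)
        for order_id, seq, _ in records:
            if seq <= last[order_id]:
                return False
            last[order_id] = seq
        return True

    return {
        "count_matches": len(primary) == len(replica),
        "replica_ordered": in_order(replica),
        "content_matches": digest(primary) == digest(replica),
    }


if __name__ == "__main__":
    avg, peak = rates(8_000_000, 300_000)
    print(f"average {avg:.1f}/s, peak {peak:.1f}/s, ratio {peak / avg:.2f}")
```

Keeping the ordering check separate from the content hash shows which property failed. A replica can hold exactly the right records and still have replayed them out of order.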

“Recovery is a product requirement. You design for the day everything fails, then you prove it under load until the runbook is muscle memory,” says Aitha.

Operating to Peak Standards

With recovery in place, the day-to-day test is peak season, when holiday demand concentrates traffic, orders and returns. In 2024, core retail holiday sales reached a record $994.1 billion, reflecting broad strength across categories. Online spending reached $241.4 billion, which puts ingestion capacity and replay windows at the forefront as concurrency spikes.

Those peaks are where capacity models, backpressure handling and replay strategy are truly tested. For a nationwide retailer operating hundreds of stores and multiple distribution centers, Aitha’s engineering teams coordinate forecasting and transaction pipelines that process multi-channel data in real time, linking store, digital and supply-chain systems through a hybrid batch-and-streaming framework. Automated validation jobs and service-level monitoring detect lag or data drift within seconds, triggering dynamic scaling in Google Cloud Dataflow and Pub/Sub to preserve latency budgets. Weekly operational reviews ahead of major sales events align engineering, analytics and merchandising groups around shared performance metrics and risk thresholds. His selection for the Raptors Fellowship reflects the same focus on pairing engineering rigor with disciplined, real-world operations.
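Dataflow's autoscaler makes its own scaling decisions for managed jobs. The sketch below is not that algorithm. It is a hypothetical policy that shows the reasoning behind lag-driven scaling and a simple volume-drift check. Capacity must cover incoming traffic plus enough extra throughput to drain any backlog within a target time, clamped to minimum and maximum worker counts. A batch whose row count sits more than three standard deviations from recent history is flagged as drift. The parameter names, per-worker throughput and three-sigma threshold are all illustrative assumptions.

```python
import math
import statistics


def target_workers(arrival_rate: float, backlog: int, per_worker_rate: float,
                   drain_seconds: float, min_workers: int = 1, max_workers: int = 100) -> int:
    """Workers needed to keep up with arrivals and clear the backlog within drain_seconds."""
    required_rate = arrival_rate + backlog / drain_seconds
    workers = math.ceil(required_rate / per_worker_rate)
    return max(min_workers, min(workers, max_workers))


def volume_drift(current: int, history: list[int], threshold: float = 3.0) -> bool:
    """Flag a batch whose row count is more than `threshold` std devs from recent history."""
    mean = statistics.fmean(history)
    stdev = statistics.pstdev(history)
    if stdev == 0:
        return current != mean
    return abs(current - mean) / stdev > threshold


if __name__ == "__main__":
    # Hypothetical peak: 167 events/s arriving, 30,000 queued, 50 events/s per worker,
    # backlog to be cleared within 5 minutes.
    print(target_workers(167, 30_000, 50, 300))
    print(volume_drift(120, [100, 102, 98, 100, 100]))
```

The clamp matters in practice. It keeps a noisy lag signal from scaling a job to zero or past a cost ceiling during a promotion.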

“Operational excellence is far from glamorous. It is runbooks, alerts that tell the truth and a rhythm that lets business partners sleep,” observes Aitha.

Looking Ahead: Resilience as Infrastructure

As reliability moves from aspiration to baseline, investment is shifting toward platforms that deliver clean data and predictable latency at scale. Cloud revenues are projected to reach $2 trillion by 2030, and data centers worldwide may require $6.7 trillion in capital by then to keep pace with compute and AI demand. In that environment, resilience becomes part of cost control and part of the customer experience. Aitha continues to advance these standards through his published technical work on resilient distributed systems.

“The mandate is simple. Keep the system available, keep it fast and make every decision traceable,” notes Aitha.